Most software developers think translation is just a matter of replacing a string in a user interface. Unfortunately, it is not that simple. First of all, strings do not necessarily map one-to-one between languages: “cancelling” an operation on a ticketing website is translated differently from “cancelling” a flight. Placeholders add further complexity: while in most languages you can find a perfect equivalent of “%n translations”, some languages use different forms depending on whether %n equals 2, 3, and so on.
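To make the placeholder problem concrete, here is a minimal sketch of plural selection for “%n translations”. It assumes whole numbers only and uses simplified plural rules for English and Polish; the function names and the message table are hypothetical, and a real application would normally rely on an internationalization library rather than hand-written rules.

```python
def plural_category_en(n: int) -> str:
    # English distinguishes only singular and plural.
    return "one" if n == 1 else "other"


def plural_category_pl(n: int) -> str:
    # Simplified Polish rule for whole numbers:
    # 1 -> "one"; numbers ending in 2-4 (but not 12-14) -> "few"; the rest -> "many".
    if n == 1:
        return "one"
    if n % 10 in (2, 3, 4) and n % 100 not in (12, 13, 14):
        return "few"
    return "many"


# Hypothetical message table: one target string per plural category.
MESSAGES = {
    "en": {"one": "%n translation", "other": "%n translations"},
    "pl": {
        "one": "%n tłumaczenie",
        "few": "%n tłumaczenia",
        "many": "%n tłumaczeń",
    },
}
RULES = {"en": plural_category_en, "pl": plural_category_pl}


def format_count(lang: str, n: int) -> str:
    # Pick the plural category first, then fill in the placeholder.
    category = RULES[lang](n)
    return MESSAGES[lang][category].replace("%n", str(n))


for n in (1, 2, 5, 12, 22):
    print(format_count("en", n), "|", format_count("pl", n))
```

English needs two strings here while Polish needs three, which is why a single “%n translations” source string cannot always be translated as a single target string.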

The following article describes best practices for software developers who wish to embark on the journey of continuous localization. We will go over the lifecycle of a single source string, and we will assume that there is only one target string for each source string in each language.

The goal: Continuous localization

Continuous localization is all about meeting tight deadlines. In order to achieve the ideal situation, we require the following:

- The translator to be able to translate without having to ask questions

- The translated string to display correctly and not to require in-context review

- The user to see all strings in the language of their choice

We believe that careful preparation of the content for translation reduces the total time it takes to get to the desired results, because the overhead of communication and checking costs more than preparing the content correctly in the first place.

Step 1: Source string review and enrichment

In this step, the original source string changes to a reviewed source string, no matter what the source language is — though for most readers this will be English. For training purposes, it’s not a bad idea to store both the original source string and the reviewed source string, to detect potential errors.

Most source strings consist of an actual string and a key that indicates where the source string appears. Many keys are self-explanatory, while others are not, especially in lesser-used areas of the software that deal, for example, with integrations with other software products. In these cases, the terminology and use of these tools might not be very clear to the translators.

In this step, the source string may be changed, and the identifier enriched with a description. It is recommended to make the source string more explicit in case of ambiguity, especially if you are not short on screen real estate.

The source string should be reviewed by a person who natively speaks a language other than English and has experience with translating user interfaces. It’s better if this person is equipped to search for content in the code, i.e. is able to generate a screenshot or work out what a certain key means; otherwise they might need the assistance of a developer. During source string review, this person should ask themselves the following questions: In how many ways can I translate this string into my native language? Do all translations mean the same thing? How do I know which one to pick? If they detect ambiguity, it’s better to rephrase the string or add information on how to translate it accurately.

The outcome will be a set of strings where some strings may have been changed, and some strings have been enriched with context (potentially a screenshot).
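As an illustration, the outcome of this step could be stored in a record like the one below. This is only a sketch: the class and field names are assumptions made for this example, not the format of any particular translation tool.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SourceString:
    """One source string after review and enrichment (hypothetical layout)."""

    key: str                           # identifier showing where the string appears
    original: str                      # the string as written by the developer
    reviewed: Optional[str] = None     # rephrased string, if the reviewer changed it
    description: Optional[str] = None  # context added for the translator
    screenshot: Optional[str] = None   # path or URL of a screenshot, if available

    @property
    def text_for_translation(self) -> str:
        # Translators get the reviewed string when one exists.
        return self.reviewed if self.reviewed is not None else self.original

    @property
    def was_changed(self) -> bool:
        # Keeping both versions makes it possible to list what the review changed.
        return self.reviewed is not None and self.reviewed != self.original


cancel = SourceString(
    key="booking.flight.cancel",
    original="Cancel",
    reviewed="Cancel flight",
    description="Button that cancels the booked flight, not the current dialog.",
)
print(cancel.text_for_translation, cancel.was_changed)
```

Storing the original next to the reviewed string is what makes the training use mentioned above possible: the changed strings can be collected and shown to the people who write source text.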

PRO TIP

Consider aligning source string review with functional testing.

INSIGHTS

Source string review is a critical step but not a very popular one: a survey we carried out in April-May 2019 revealed that only 20.7% of enterprises (translation buyers) applied source string review in continuous workflows. The figure dropped even further for language service providers, with only 18.8% carrying out source language editing in their most complex workflow.

I presume this may be rooted in the setup of translation management systems, given most of them are not able to accommodate same-language tasks as a preparatory step in multilingual workflows. Still, I recommend that you find a way to experiment with this.

Step 2: Pseudotranslation

The industry’s best-kept secret is pseudotranslation, a functionality that is offered out of the box by almost every reputable translation tool. It means taking a source string and replacing its characters with other, though not completely different, characters. For instance, you can replace the vowels with a random accented version, double certain letters, insert some non-breaking spaces here and there (remember, you may need to display those correctly for French), etc.

http://www.pseudolocalize.com/, a free online service, converted pseudotranslation to [!!! Þƨèúδôℓôçáℓïƺáƭïôñ ℓôřè !!!]
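A minimal sketch of the idea follows. It is not how any particular tool implements pseudotranslation: the vowel mapping is fixed rather than random so that the result is reproducible, letter doubling and non-breaking spaces are left out, and the placeholder pattern (`%n`-style and `{name}`-style) is an assumption about how your strings are written.

```python
import re

# Fixed vowel-to-accented-vowel mapping (an assumption; tools often randomize).
ACCENTED = str.maketrans("aeiouAEIOU", "áéíóúÁÉÍÓÚ")

# Placeholders that must survive unchanged, e.g. %n or {name}.
PLACEHOLDER = re.compile(r"(%\w+|\{[^{}]*\})")


def pseudotranslate(source: str, prefix: str = "[!!! ", suffix: str = " !!!]") -> str:
    # With one capturing group, re.split keeps the placeholders at odd indexes.
    parts = PLACEHOLDER.split(source)
    converted = [
        part if index % 2 == 1 else part.translate(ACCENTED)
        for index, part in enumerate(parts)
    ]
    # The markers make truncated strings easy to spot on screen.
    return prefix + "".join(converted) + suffix


print(pseudotranslate("%n translations"))
print(pseudotranslate("Cancel flight"))
```

Leaving placeholders untouched matters: if `%n` were altered, the application would no longer substitute the number, and the test would report a problem that real translations would not have.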

Pseudotranslation helps test whether you can display international characters in your application and whether all strings have been extracted for translation. Companies of all sizes definitely underrate it: I saw the Microsoft Azure profile page have difficulties displaying the á in my name in 2019!

If you see a normal English string anywhere in the application after pseudotranslating it, that’s a bad sign: it means not every string has been internationalized. As a developer, you should fix it and run the pseudotranslation again.
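Part of this check can be automated. The sketch below assumes that pseudotranslated strings are wrapped in markers such as `[!!! … !!!]`, as in the example output above, and that you already have some way of collecting the strings visible in the running application (for instance from a UI test); the function name is hypothetical.

```python
def find_hardcoded(visible_strings, prefix="[!!! ", suffix=" !!!]"):
    """Return the visible strings that were apparently not pseudotranslated."""
    return [
        text
        for text in visible_strings
        # Ignore empty labels; flag everything that lacks the markers.
        if text.strip() and not (text.startswith(prefix) and text.endswith(suffix))
    ]


screen = ["[!!! Cáncél flíght !!!]", "Save", "", "[!!! %n tránslátíóns !!!]"]
print(find_hardcoded(screen))
```

A check like this only catches strings that bypass extraction; truncated or badly rendered text still needs a person looking at the screen.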

PRO TIP

There’s no particular requirement for the profile of the person doing this task, but somebody with a sharp eye for detail is generally a safe bet.

INSIGHTS

Pseudotranslation is not a very popular practice among companies, though it should be. The results in our survey showed that only 15% pseudotranslated every string while 23% did not perform any pseudotranslation at all — the rest did it occasionally.

Depending on your needs, you may switch the order of step 1 and step 2. I recommend source string review and enrichment before pseudotranslation to get the content right in one language before embarking on the localization journey, but if you have short deadlines for localization testing, it may make sense to pseudotranslate (which is immediate) before source editing (which takes time).

Step 3: Machine translation

This area is more familiar to translation managers. I will avoid discussing things such as what’s the best MT engine for your needs, how to train it or what’s the expected ROI. For the moment, just think of machine translation as a “better-than-keeping-it-in-English” ready-to-use solution.

You can use a popular MT engine such as Microsoft’s or Google’s and even train it relatively simply with your own data, provided you have translation memories (ask your vendor) or structured files such as RESX, Java properties, XML, YAML, JSON, etc. In the case of structured files, you need to do the so-called structural alignment, which basically matches strings in multiple files according to the key.
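Structural alignment can be sketched in a few lines. The example assumes flat JSON resource files with one key per string, loaded here from inline text for brevity; the function name and sample data are hypothetical, and nested files or other formats would need their own parsing.

```python
import json


def align_by_key(source: dict, target: dict):
    """Pair source and target strings that share the same key."""
    pairs = []
    for key, source_text in source.items():
        target_text = target.get(key)
        # Skip keys that are missing or empty in the target file.
        if target_text:
            pairs.append((key, source_text, target_text))
    return pairs


english = json.loads('{"flight.cancel": "Cancel flight", "flight.book": "Book flight"}')
french = json.loads('{"flight.cancel": "Annuler le vol"}')

for key, source_text, target_text in align_by_key(english, french):
    print(key, "|", source_text, "|", target_text)
```

The resulting pairs are the raw material for a translation memory; strings that exist only in the source file are simply left out.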

PRO TIP

Before you are tempted to build your own matching, be aware that tools like memoQ can do it for you and export a TMX that you can then easily import to Google or Microsoft.

If you have more time and budget, consider inten.to. It’s an API that allows you to use a single call and routes it to the best MT engine for a certain language pair. What else could a middleware provider recommend if not another middleware?!

Step 4: Human translation

The last step in the life cycle is when the high-quality human translation is implemented in the codebase. The first three steps can actually happen before the strings are assigned to human translators, as you may decide that humans translate only the content most critical to your business rather than all of it. You can also decide whether you want translation or machine translation post-editing. Translation requires only the source; post-editing requires a bilingual file format such as XLIFF.

You may actually put the source content into a translation management system prior to step 2, which can make the rest of the process easier. Also be aware that translators and translation companies usually prefer XLIFF generated by translation tools to XLIFF generated by developer code. XLIFF 1.2 was not particularly rich in functionality, so it accumulated a lot of extensions. XLIFF 2.x, in turn, never really became widespread: by the time it came out, the problems it solved were already obsolete, because the main translation tools had solved them before the standard was updated. Having already spent a lot of money on those solutions, the tool vendors would have needed to spend even more to support the new standard.
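To show what “bilingual” means in practice, here is a sketch that writes a bare-bones XLIFF 1.2 file with the machine translation pre-filled as the target. It is for illustration only: as noted above, a translation tool will usually generate richer XLIFF than developer code, and the function name, file name and sample strings are assumptions.

```python
import xml.etree.ElementTree as ET

XLIFF_NS = "urn:oasis:names:tc:xliff:document:1.2"


def build_xliff(units, source_lang="en", target_lang="fr", original="strings.json"):
    """Build a minimal XLIFF 1.2 document from (key, source, MT output) tuples."""
    root = ET.Element("xliff", {"version": "1.2", "xmlns": XLIFF_NS})
    file_el = ET.SubElement(root, "file", {
        "original": original,
        "source-language": source_lang,
        "target-language": target_lang,
        "datatype": "plaintext",
    })
    body = ET.SubElement(file_el, "body")
    for key, source_text, mt_text in units:
        unit = ET.SubElement(body, "trans-unit", {"id": key})
        ET.SubElement(unit, "source").text = source_text
        # The state tells the post-editor that the target still needs review.
        target = ET.SubElement(unit, "target", {"state": "needs-review-translation"})
        target.text = mt_text
    return ET.tostring(root, encoding="unicode")


document = build_xliff([("flight.cancel", "Cancel flight", "Annuler le vol")])
print(document)
```

Each `trans-unit` carries the source and the proposed target side by side, which is exactly what a post-editor needs and what a source-only file cannot provide.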

PRO TIP

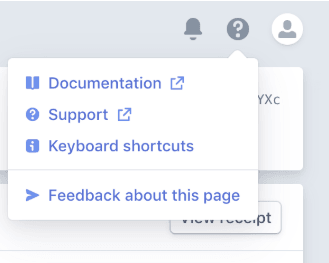

If possible, allow users to provide feedback on your content. Stripe does this in some fashion! They feature a small window on every page where you can simply click and offer feedback. This can be very useful if you decide to use a combination of machine and human translation, as feedback can help speed up the revision/amendment of certain content when necessary.

+1 step: Ensure communication channels work

If you can, accommodate this extra step! Translators are perhaps the only people who read all your content. They can certainly provide quality feedback, but will only do so if there is a simple way to do it. Therefore, make it easy, pave the way!

Most of you are probably thinking the only way to do it is by giving translators access to your issue tracking system. However, in order to save copy-pasting effort, I would suggest setting up a shared Google Sheet so that they don’t have to send feedback to the project manager, who in turn sends it to you, for you to finally copy everything into your systems.

PRO TIP

The key here is not linguistic quality assurance (LQA) but process improvement. While every translator makes mistakes every now and then, most errors arise because translators don’t understand the source text, which is a fault in your process, or because they don’t have easy access to the resources you want them to use. The latter is generally either a project management issue at your language service provider (they don’t receive the resources) or an overcomplication of system access in your setup (they don’t want to spend time using them, as nobody really pays for the extra hassle).

Summary

In all honesty, these steps are probably easier said than done. Currently, I am not aware of any single tool that would take your strings through such a workflow without any customization (if you are, please contact me!).

Key points

- Define who is responsible for which step, what is done in-house and what is outsourced.

- Ask your translation department what translation management system they prefer to use. What may be logical for a developer may be a bit oversimplified for a linguist, and functionalities such as terminology management, concordance or predictive typing may be crucial to a good translation.

- Remember that the process and technical capabilities may define the quality of translations. If you don’t get those right at first, legacy is created in the string database and can lead to suboptimal translations later.

- Before building a system, it is important to talk to people who actually have experience in translation. If you need help, please feel free to turn to us for a free consultation.